Source Verification

Early detection of hardware issues in Hedge 18.3

There has been a shift lately. Back in the day, checksum verification was introduced because of the rapidly decaying quality of storage. The older a hard disk became, the more write errors would occur. But today, backups are no longer the weakest link in production. HDDs have become so reliable (eight years of working life is already on the horizon) that the hunger for terabytes will, for the first time, outpace lifespan. Soon, you’ll retire a drive not because it failed, but because it’s just too small.

Instead, sources have become the weakest link. Not the media itself, but what is used to get data off of it. Between what is on the card and what is received by the host lies a long and winding road, controlled by readers and peripherals like hubs and docks. Unfortunately, these are far from infallible. Even worse, we see their error rate going up. We think two things are causing this increase: miniaturization and USB-C.

Before going into the why, let’s talk about how to fix it.

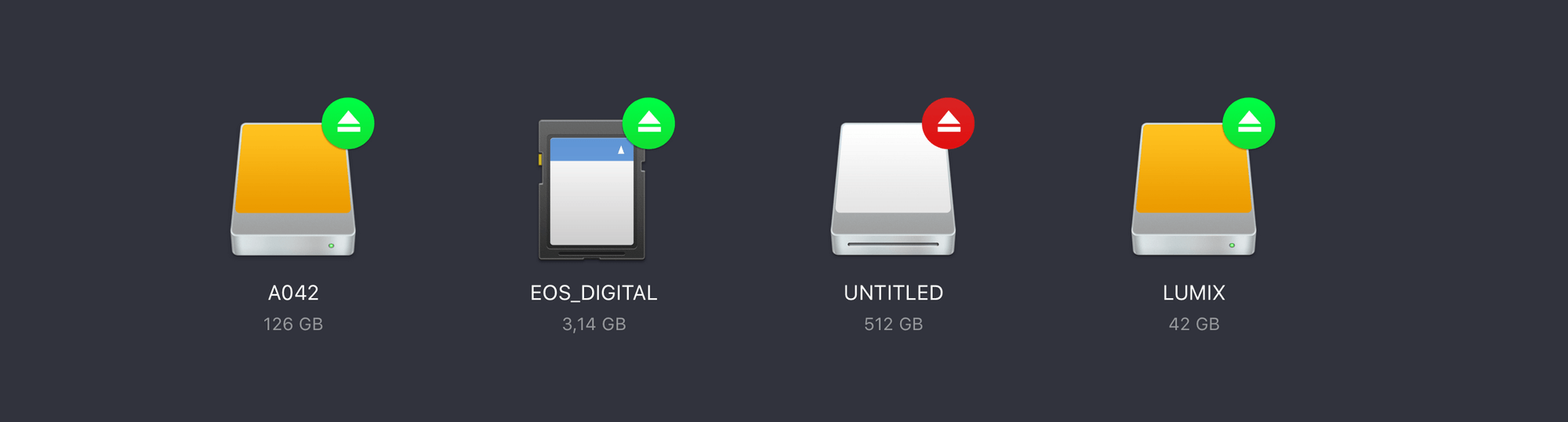

Short version — enable Checkpoint in Hedge 18.3’s preferences:

Long(er) version: keep reading 📖

Source Integrity

When offloading, copies are verified against the source. Transfer errors are all caught, and you move on to the next source. But there’s one big caveat: it assumes the source always spits out its bytes perfectly. In reality, this is not the case; heat, dust and wonky hardware can cause hiccups, resulting in something that differs from what is actually on the source.

If a read error happens, it propagates: incorrect data is presented to the Mac or PC, checksums are made from the wrong data, and that data is written to all destinations, where it checks out nicely against the checksum.
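To make that propagation concrete, here is a minimal sketch that simulates it in memory. The single flipped bit, the `flip_bit` helper and the use of SHA-256 are all assumptions for illustration; they say nothing about which hash or pipeline Hedge actually uses.

```python
import hashlib


def checksum(data: bytes) -> str:
    # SHA-256 is only a stand-in for whatever hash an offload tool uses.
    return hashlib.sha256(data).hexdigest()


def flip_bit(data: bytes, index: int) -> bytes:
    # Hypothetical helper: simulate a read error by flipping one bit.
    corrupted = bytearray(data)
    corrupted[index] ^= 0x01
    return bytes(corrupted)


on_card = b"frame data as recorded on the card"

# The host receives a damaged version of what is on the card.
received = flip_bit(on_card, 5)

# The "source" checksum is computed from what the host received.
source_checksum = checksum(received)

# Both destinations get a faithful copy of the damaged data.
destinations = [bytes(received), bytes(received)]

# Every copy verifies against the source checksum...
copies_verify = all(checksum(d) == source_checksum for d in destinations)

# ...even though none of them matches what is on the card.
matches_card = checksum(received) == checksum(on_card)

print("copies verify:", copies_verify)
print("matches card:", matches_card)
```

Verification passes because every checksum in the chain was derived from the same faulty read.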

Fortunately, there is a way to detect this kind of error, thanks to the difference in nature between read and write errors. Write errors leave evidence: corrupted bits can be sniffed out by comparing them with other copies (and, in the case of Hedge, repaired).

Read errors, on the other hand, are not recoverable. These errors are random and caused by intermediate electronics instead of drive issues. Rereading that file will give a different result, and thus raise a red flag that something’s amiss on a hardware level. There’s no way of telling which read is the correct one, so there’s only one solution: Full Stop.
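The idea of reading twice and stopping on a disagreement can be sketched as follows. This is an illustration under stated assumptions, not Hedge's implementation: `verify_source`, `SourceIntegrityError` and the simulated flaky reader are hypothetical names, and SHA-256 is a stand-in hash.

```python
import hashlib
from typing import Callable


class SourceIntegrityError(Exception):
    """Raised when two reads of the same source file disagree."""


def digest(data: bytes) -> str:
    # Stand-in hash; any content checksum would serve the same purpose.
    return hashlib.sha256(data).hexdigest()


def verify_source(read_file: Callable[[], bytes]) -> str:
    """Read the same file twice and compare the two digests."""
    first = digest(read_file())
    second = digest(read_file())
    if first != second:
        # There is no way to tell which read was right, so stop.
        raise SourceIntegrityError("reads disagree: hardware is suspect")
    return first


def make_flaky_reader(data: bytes, fail_on_read: int) -> Callable[[], bytes]:
    """Hypothetical reader that flips one bit on the Nth read (0 = never)."""
    calls = {"count": 0}

    def read() -> bytes:
        calls["count"] += 1
        if calls["count"] == fail_on_read:
            damaged = bytearray(data)
            damaged[0] ^= 0x01
            return bytes(damaged)
        return data

    return read
```

With `make_flaky_reader(b"clip", fail_on_read=2)`, the second read differs from the first and `verify_source` raises; with `fail_on_read=0` both reads agree and the digest is returned.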

We call this source integrity process Checkpoint. Sounds familiar? That’s because it is — it’s identical to our verification app Checkpoint, but on speed.

Checkpoint Charlie

Luckily, reading twice from a drive doesn’t take twice as long. Since a checksum only takes the file’s contents into account, all the overhead of creating file structures, checking permissions, attributes and more can be skipped, leaving a bare-bones read stream. Still, it takes precious time. That’s why we’ve smartened up Checkpoint:

Early Detection: The goal here is to detect hardware issues, so it’s best to know as early as possible. Instead of verifying a source after the entire copy finishes, Checkpoint works on a file-by-file basis. Even if only the last file has an issue, you’ll still save time this way.

MHL Awareness: Checksums are costly. It takes quite a few CPU cycles to calculate them, so it would be a shame to have to redo that over and over again. What if your source is not a camera card, but a travel drive? If it was made by a professional DIT, it’s likely to have one or more Media Hash Lists containing the original checksums. Keenan Mock of Light Iron dubbed this the Hero Checksum.

If you have a drive with MHLs onboard for all files, Checkpoint takes zero extra time. That’s quite cheap for the added benefit of automatically checking your hardware, so we advise always using Checkpoint.
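Both refinements can be sketched in one loop: check each file right after it is copied, and skip the second source read whenever a trusted checksum is already known. Everything here is an assumption for illustration: the `offload` function, the `known` mapping (a plain dict standing in for checksums taken from an MHL, whose parsing is left out) and SHA-256 as the hash. A real tool would also have to make sure the second read bypasses the operating system's file cache, which this sketch does not address.

```python
import hashlib
import shutil
from pathlib import Path
from typing import Mapping


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Content-only read: stream the bytes through a hash, nothing else."""
    h = hashlib.sha256()  # stand-in; not necessarily what an MHL contains
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def offload(source_dir: Path, dest_dir: Path,
            known: Mapping[str, str]) -> dict[str, str]:
    """Copy file by file and check each file before moving on.

    Returns {relative path: "known" or "reread"} for files that passed.
    Raises RuntimeError at the first mismatch, so later files are not copied.
    """
    checked: dict[str, str] = {}
    for src in sorted(p for p in source_dir.rglob("*") if p.is_file()):
        rel = src.relative_to(source_dir).as_posix()
        dst = dest_dir / rel
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copyfile(src, dst)       # first read of the source
        copy_digest = file_digest(dst)  # what actually arrived
        if rel in known:
            # A trusted checksum exists: no second source read needed.
            reference, how = known[rel], "known"
        else:
            # No trusted checksum: read the source a second time.
            reference, how = file_digest(src), "reread"
        if copy_digest != reference:
            raise RuntimeError(f"mismatch on {rel}: stopping the offload")
        checked[rel] = how
    return checked
```

Note that a mismatch in this simplified loop cannot tell a bad write from a bad read; a full tool would verify the copy against the first read separately, and only then compare against the reread or the known checksum.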

Honey, I shrunk the cards

As said, two things are causing the increasing error rate: miniaturization and USB-C. Since neither is bad tech at all, let’s have a look at why this is happening.

Technology always miniaturizes, whether it’s a CPU or storage. Capacity increases, and the footprint of storage becomes smaller. It has led to great form factors for cameras, drones and action cams, but physics didn’t join the party: the smaller something is, the less capacity it has to dissipate heat. Heat is precisely what makes storage unreliable, and with densities climbing at a Moore’s law pace, the problem grows exponentially.

Until recently, only situations like shooting in the jungle or desert would require you to think about heat, but nowadays it’s different: shooting Full HD proxies on an Inspire drone’s MicroSD, which then gets offloaded with a Surface Book’s integrated reader, is not too far-fetched a situation, even for professionals.

Think that’s not you? Here are three more situations; maybe one of ’em rings a bell 😉

The Wonky Dock

No, The Wonky Dock is not a fancy restaurant. It’s what we’ve dubbed the USB-C docks that attach to the side of a MacBook Pro. With a brilliant form factor, and a matching price, they’re awesome for daily life. But offloading media cards isn’t everyday life. Those units’ chipsets can handle the occasional SD and USB transfer, but not the continuous streams pros are used to. Every bit transferred creates heat, and these docks don’t dissipate it well.

CFAST

It’s not always a case of pay peanuts, get monkeys: high-end CFAST cards have major heat issues too. If you’re lucky, an overheated card won’t mount or will eject during transfer. But if the card manages to pull through, there’s a good chance that the bits and bytes it spat out aren’t the right ones. Voilà, a corrupt clip, with no way to tell unless you actually review all clips. But that’s something you of course always do anyway, don’t you? 😉

Power Woes

Bus powered is so convenient, and it became even better with USB-C. Saves you from bringing all those power bricks, and looking for outlets, doesn’t it? Keep this in mind: both Windows and macOS determine if a USB connection is USB3 by the reported power consumption.

So, if you use an underpowered hub or a reader whose cable has missing connections, nothing seems wrong until you start putting a load on it. At some point, it runs out of power, and chances are your hub will fall back to USB2. You won’t notice unless you dig into the OS’s statistics. If you do notice, it’s because your OS is spontaneously ejecting and immediately remounting your drives, or your transfers slow down to a crawl.

Orange is the new red

Hedge’s previous update introduced Duplicate Detection. As promised, we asked for your feedback and refined how it works.

When starting a transfer into a folder already containing data, Hedge 18.2 looked for duplicates during the transfer. Now, Hedge knows before starting a transfer which files to skip, and the progress bar scales accordingly. If a Source is empty, or already transferred in full, you get a friendly heads-up.
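A pre-transfer scan of this kind might look like the sketch below. It treats a file as a duplicate when the destination already holds the same relative path with the same size; that criterion is purely an assumption for illustration, as are the `plan_transfer` name and the status strings. How Hedge actually decides what counts as a duplicate is not described here.

```python
from typing import Mapping


def plan_transfer(source: Mapping[str, int],
                  destination: Mapping[str, int]):
    """Decide what to copy before the transfer starts.

    Both arguments map relative paths to sizes in bytes.
    Returns (files to copy, total bytes to copy, status message).
    """
    # Assumed duplicate rule: same relative path and same size.
    to_copy = {
        name: size
        for name, size in source.items()
        if destination.get(name) != size
    }
    if not source:
        status = "source is empty"
    elif not to_copy:
        status = "already transferred in full"
    else:
        status = "ready"
    # The total is what a progress bar would scale against.
    return to_copy, sum(to_copy.values()), status
```

For example, `plan_transfer({"a.mov": 100, "b.mov": 50}, {"a.mov": 100})` skips `a.mov` and leaves 50 bytes to copy, so progress is measured against the remaining work only.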

The behavior of the transfer bar changed a bit too: A red transfer bar in Hedge now solely means that a non-recoverable issue occurred: a hardware issue, like a disconnected drive. If you have Checkpoint enabled and it detects problems, that’s a red flag too. Red = hardware issue.

Hedge’s error reporting now also distinguishes between the data part of a file and its attributes, permissions, and timestamps. If a file reaches the destination intact, but one of the additional flags could not be read or written, an orange warning is shown. Upon completion of the transfer, you can review these in the Transfer Log, or click on the Warning shown in the transfer. Orange = file issue.
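The red and orange rule can be written down as a small decision function. The type and field names below (`BarColor`, `TransferState`, `NORMAL` for the default state) are hypothetical and chosen only to mirror the description above; this is a sketch of the rule, not of Hedge's code.

```python
from dataclasses import dataclass, field
from enum import Enum


class BarColor(Enum):
    NORMAL = "normal"  # hypothetical name for the default state
    ORANGE = "orange"  # file issue: data intact, some metadata not carried over
    RED = "red"        # hardware issue: non-recoverable


@dataclass
class TransferState:
    drive_disconnected: bool = False
    checkpoint_mismatch: bool = False
    # Attributes, permissions or timestamps that could not be read or written.
    metadata_warnings: list = field(default_factory=list)


def bar_color(state: TransferState) -> BarColor:
    # Hardware problems always win: red means stop and check your gear.
    if state.drive_disconnected or state.checkpoint_mismatch:
        return BarColor.RED
    # Data arrived intact, but something about the file needs review.
    if state.metadata_warnings:
        return BarColor.ORANGE
    return BarColor.NORMAL
```

Checking the hardware conditions first means a transfer with both a Checkpoint mismatch and metadata warnings still shows red.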

So, that’s Checkpoint in Hedge 18.3. You can update in-app, or download Hedge here: https://hedge.video/download/hedge

PS: A Call To Camera Manufacturers

What if cameras created checksums as part of the metadata? That would be the true hero checksum.

With the processing power available in modern cameras, and the relative leanness of algorithms like XXH64, creating a checksum of the footage in camera doesn’t sound too far-fetched. Who’s game?